Corpus Visualizations, Macroanalysis, and Close Reading

In what follows, I present further results and reflections from my text mining,[1] what Franco Moretti calls “distant reading” and Matthew L. Jockers calls “macroanalysis,” along with the data visualizations it has produced.[2] In doing so, I want to reflect on (and tentatively propose some claims about) a set of related issues in using these data and visualizations for analysis: how they help us understand the corpus of texts behind them through new means of computation; how they represent knowledge hermeneutically, as constructed, mediating, interpretive structures; and how they necessarily require a return to close reading to reach humanistic conclusions about the text corpus. I suggest that being aware of all these facets at once is closely linked with traditional literary methodologies, which promote close reading alongside critical theoretical discourses. The goal in returning to close reading in our analyses of text mining, then, is to be aware of and engaged with the interpretive discourses embedded in them, and to return the results of digital methods to the critical eye of humanistic criticism.

Theories of Data and Visualizations

The first facet of considering data and visualizations that I want to address is remediation, the crossing of media boundaries that drives and lies at the heart of digital culture. Recent scholarship has sought to conceive of visualizations not only as the results of data but also as interpretive in their basic structures. Visualizations thus mediate data through a particular type of representation that should not be taken at face value, since there is an inherent tension between humanistic study and the epistemological assumptions of scientific empiricism.[3] Johanna Drucker acknowledges this tension by discussing the notion of “situatedness” for the scholar:

By situatedness, I am gesturing toward the principle that humanistic expression is always observer dependent. A text is produced by a reading; it exists within the hermeneutic circle of production. Scientific knowledge is no different, of course, except that its aims are toward a consensus of repeatable results that allow us to posit with a degree of certainty….[4]

The implication, then, is that visualizations should be read critically. As Drucker has suggested elsewhere, “Visual epistemology has to be conceived of as procedural, generative, emergent, as a co-dependent dynamic in which subjectivity and objectivity are related.”[5]

The second, related facet of considering data and visualizations that I want to address is the way that each act of macroanalysis necessarily prompts a return to close reading. As Trevor Owens points out, “data offers [sic] humanists the opportunity to manipulate or algorithmically derive or generate new artifacts, objects, and texts that we also can read and explore. For humanists, the results of information processing are open to the same kinds of hermeneutic exploration and interpretation as the original data.” In other words, “any attempt at distant reading results in a new artifact that we can also read closely.”[6] A similar line of thinking arises from exploring the implications of the results of the type of macroanalysis promoted by Moretti and Jockers. Throughout his book, Jockers emphasizes that macroanalysis is not a replacement for close reading but a helpful counterpoint to it. Going further, we need to come to terms with the fact that interpreting data generated from text mining is itself an act of close reading: to make sense of macroanalysis, humanists necessarily engage in exegesis at a micro-analytical level. Jockers hints at this idea when he writes that network data from macroanalyses “demand that we follow every macroscale observation with a full-circle return to careful, sustained, close reading.”[7]

The two methods of approaching these data and visualizations that I propose—using digital tools for analyzing the text corpus as well as critically questioning the results—are not mutually exclusive. Instead, I suggest that such critical questioning should lead scholars to consider a continual process of moving between multiple perspectives of reading, including distant as well as close (macro- and micro-analysis), in order to glean productive analyses of texts.

Word Frequencies

In this section, I present the data used as the basis of the visualizations, focusing on word frequencies and on connections between the most commonly used words. In a previous post I discussed my methods and some preliminary findings for the Latin Vulgate Judith and Hrabanus Maurus’ Commentarius in Iudith; the findings here follow up on that discussion.

First, some words about the text corpus. Together, the texts considered in this project contain 232,725 characters and 34,609 words. I have divided the entire corpus (49 texts in all, available on the Omeka site, most of them with translations) into two separate, though connected, corpora, since I have found that this yields more meaningful results: the Latin works make up so large a share of the total (roughly 85 percent of the words) that they skew what we can see about the Old English texts. For the most part, the corpus is split by language, one for Latin and the other for Old English. The exception is mixed (macaronic?) glosses, which I have included in the Latin corpus since they do not generally contain extensive Old English lexical items. After the split, the Latin corpus contains 199,233 characters and 29,411 words, and the Old English corpus contains 33,492 characters and 5,198 words.

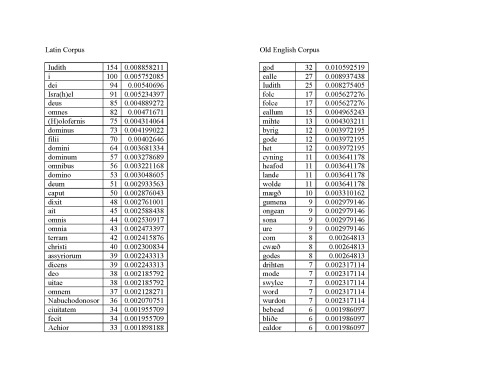
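The split described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the workflow used for this project: the `Text` record, its hypothetical language labels (`"latin"`, `"old_english"`, `"mixed"`), the letters-only word pattern, and counting characters without whitespace are all my assumptions, so counts produced this way need not match the figures reported here.

```python
import re
from dataclasses import dataclass

# Hypothetical record for one text in the corpus.
@dataclass
class Text:
    title: str
    language: str  # assumed labels: "latin", "old_english", or "mixed"
    body: str

# A "word" is assumed to be a run of Unicode letters (covers æ, þ, ð, ƿ).
WORD_RE = re.compile(r"[^\W\d_]+")

def corpus_stats(texts):
    """Return (characters, words); characters exclude whitespace (an assumption)."""
    chars = sum(len(re.sub(r"\s+", "", t.body)) for t in texts)
    words = sum(len(WORD_RE.findall(t.body)) for t in texts)
    return chars, words

def split_by_language(texts):
    """Split into Latin and Old English corpora; mixed glosses go with Latin."""
    latin = [t for t in texts if t.language in ("latin", "mixed")]
    old_english = [t for t in texts if t.language == "old_english"]
    return latin, old_english
```

Routing the `"mixed"` label into the Latin list mirrors the decision to place macaronic glosses in the Latin corpus; changing that one condition is enough to test how sensitive the results are to it.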

The following word frequency tables give data for the 30 most frequent words (not lemmatized) in the Old English and Latin corpora, excluding stopwords; the data include both the number of instances and the relative frequency (percentage of the whole). On the methods I used to generate these data, as well as the Latin stopwords list, see the previous post; the Old English stopwords list that I created is based on frequency data for the entire Old English corpus.

N.B. Because of typographical variations, I have combined the frequencies of the following spellings for accuracy: israhel/israel and olofernis/holofernis.

These frequency data may also be displayed visually in an interactive graph created in Tableau Public 8.0.
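The frequency tables rest on a few simple steps: tokenizing, folding case, merging spelling variants, dropping stopwords, and counting. The following Python sketch illustrates those steps under assumptions of my own: a regular-expression tokenizer, a hypothetical `variants` dictionary for merges such as israel to israhel, and percentages taken over all tokens including stopwords (the text above does not state which denominator the tables use).

```python
import re
from collections import Counter

# Assumed tokenizer: runs of Unicode letters; no lemmatization.
WORD_RE = re.compile(r"[^\W\d_]+")

def top_words(text, stopwords, variants=None, n=30):
    """Return (word, count, percent) for the n most frequent non-stopwords."""
    variants = variants or {}
    tokens = [w.lower() for w in WORD_RE.findall(text)]
    # Assumption: percentages are relative to all tokens, stopwords included.
    total = len(tokens)
    # Merge spelling variants onto one headword before counting.
    tokens = [variants.get(w, w) for w in tokens]
    counts = Counter(w for w in tokens if w not in stopwords)
    return [(w, c, 100 * c / total) for w, c in counts.most_common(n)]
```

A call such as `top_words(latin_text, latin_stopwords, {"israel": "israhel", "holofernis": "olofernis"})` (variable names hypothetical) would reproduce the kind of merge noted above; which spelling serves as the headword is an arbitrary choice.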

I would like to note that even these data, as scientifically empirical as they appear (and as much as they create a sense that the data analysis is reliable), are constructed. At the most basic level of my methods, for example, the stopwords lists are themselves interpretive: the lexical items selected (or, more precisely, selected only to be omitted from analysis) were chosen on the basis of linguistic assumptions about which parts of language are to be read as “significant” or “insignificant” for scrutiny. Yet even high-frequency, short function words may reveal meaning, as Moretti has demonstrated by tracing the roles of definite and indefinite articles across eighteenth- and nineteenth-century book titles.[8] Just as striking, on reflection, is the fact that I have been guided by my own training in languages and linguistics; this is not an empirical approach but a humanistic one. There is, as Drucker has suggested,[9] a seeming tension between the humanistic and scientific assumptions inherent in these methods, but they also allow for productive analysis when used together.

Collocate Clusters

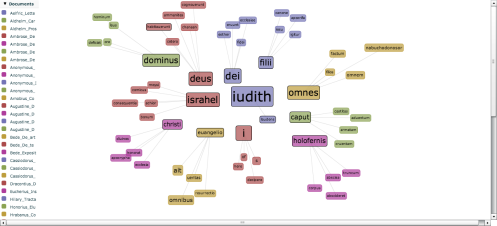

The visualizations that follow were created by uploading the Latin and Old English texts into the Links tool in the Voyant Tools suite, in order to present collocate clusters.[10] This tool uses statistical analysis to count lexical collocates (words that occur together within a given span of text) and presents these co-occurrences as a network based on their frequencies. Each lexical item is presented as a node (connecting point), with links drawn between words that are closely associated as collocates. For these visualizations, I have directed the Links tool to ignore stopwords, in order to render networks only of the most frequent terms in the corpus. In all of the visualizations below, node size represents the frequency of that lexical item in the corpus.
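Voyant's internal implementation is not described here, so the following Python sketch only illustrates the general idea of a collocate network, not the Links tool itself: count how often two non-stopwords occur within a fixed window of each other and keep the pairs that recur. The window size, the minimum count, and dropping stopwords before windowing are all assumptions rather than Voyant's settings.

```python
import re
from collections import Counter

# Assumed tokenizer: runs of Unicode letters, case-folded below.
WORD_RE = re.compile(r"[^\W\d_]+")

def collocate_edges(documents, stopwords, window=5, min_count=2):
    """Count undirected co-occurrences of non-stopwords within `window` tokens.

    Each document is processed separately, so no pair spans two documents.
    Returns a Counter mapping sorted (word_a, word_b) tuples to counts.
    """
    edges = Counter()
    for doc in documents:
        tokens = [w.lower() for w in WORD_RE.findall(doc)]
        # Assumption: stopwords are removed before the window is applied.
        tokens = [w for w in tokens if w not in stopwords]
        for i, left in enumerate(tokens):
            for right in tokens[i + 1 : i + 1 + window]:
                if left != right:
                    edges[tuple(sorted((left, right)))] += 1
    # Keep only pairs that recur often enough to draw as links.
    return Counter({pair: c for pair, c in edges.items() if c >= min_count})
```

Because each document is processed separately, no pair can span two texts. Passing the whole corpus as one string (for example `collocate_edges(["\n".join(docs)], stops)`) removes that boundary, which is one plausible reason, though not a confirmed one, why the single-file uploads below show more connections across texts.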

First, I present a visualization of the collocate clusters detected when the Latin text corpus was uploaded as individual files, for which each distinctive text and passage constituted an individual plain-text document.[11]

Collocate clusters in the Latin text corpus, with stops removed and nodes representing lexical frequencies.

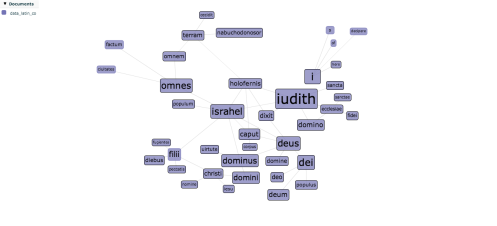

Second, I present a visualization of the collocate clusters detected when the Latin text corpus was uploaded as a single file, constituting every text compiled into one plain-text document.[12]

Collocate clusters in the Latin text corpus (as one file), with stops removed and nodes representing lexical frequencies.

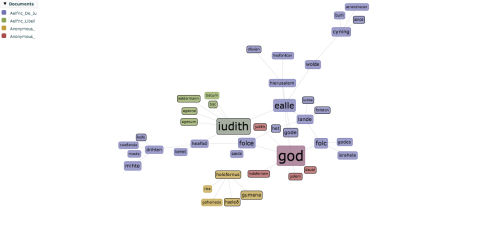

As can be seen, the connections between clusters across texts are more discernible in the second image (when the input is a single file), allowing for a different type of analysis that emphasizes intertextuality even at the lexical level. The same difference is apparent when comparing visualizations of the Old English text corpus, again uploaded as individual files and as a single file.[13]

Collocate clusters in the Old English text corpus, with stops removed and nodes representing lexical frequencies.

Collocate clusters in the Old English text corpus (as one file), with stops removed and nodes representing lexical frequencies.

It should also be apparent that the arrangement of nodes in these visualizations is significant: in each case, I have arranged the nodes so that synonymous terms (e.g. deus, dei, dominus, domini, god, godes) appear near one another, to highlight the connections between those and other terms. The visualizations can be manipulated further by changing node size (in the tool options) from representing lexical frequency (as in these images) to representing the number of associations each lexical item has with others. These arrangements, then, are not random but purposeful and hermeneutic; the visualizations do present statistical associations between lexical items, but the arrangements of the clusters have already been processed through my own humanistic interpretations. While empirical, scientific approaches (on which many digital humanities endeavors rest) value quantifiable, verifiable, and repeatable results, these visualized collocate clusters hardly deliver significant results within those parameters. These claims are not meant to denigrate such visualizations or the work that goes into creating them, but to point out that, in presenting useful ways of seeing the corpus, they do so as interpretive, qualitative, and graphically constituted representations of data.[14]
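Switching between the two node-sizing options mentioned above amounts to assigning each node a different number. A minimal Python sketch, assuming the network is given as a list of word pairs and the corpus frequencies as a dictionary; the function `node_sizes` and its `by` parameter are hypothetical and are not Voyant options.

```python
from collections import Counter

def node_sizes(edges, frequencies, by="frequency"):
    """Return a size per node in a collocate network.

    edges: iterable of (word_a, word_b) pairs, assumed already normalized.
    frequencies: mapping of word -> corpus frequency.
    by: "frequency" sizes nodes by corpus frequency;
        "degree" sizes them by their number of distinct associations.
    """
    degree = Counter()
    for a, b in set(edges):  # count each distinct link once
        degree[a] += 1
        degree[b] += 1
    if by == "frequency":
        return {w: frequencies.get(w, 0) for w in degree}
    if by == "degree":
        return dict(degree)
    raise ValueError(f"unknown sizing: {by!r}")
```

The two options can rank the same word very differently: a frequent word with few collocates shrinks under `"degree"`, which is one reason the choice of sizing is itself an interpretive decision.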

If we step away from the epistemological assumptions of scientific inquiry, however, the long-standing humanistic tool of close reading offers a way of teasing out significance from these visualizations that does not depend upon empirical notions of certainty.[15] In this way, the significance of these visualizations may be examined despite, or perhaps even because of, their being interpretive, qualitative, and graphically constituted. What can we say about these clusters?

The most striking element is the confirmation of connections surrounding the notion of collective identity. The centrality of Israhel (and its variants) is apparent from its frequency across the corpus, as the fourth most frequent word with 91 instances, and the collocate clusters only further emphasize this significance: it is associated directly with Iudith, with words for the deity (deus, dominus, and their variants), and with filii (children), populum (people), and omnes (all), the last of which creates further indirect associations with ciuitates (cities), omnem (all), and (further out) terram (land). I mentioned the significance of Israelite identity in my previous post, since it is central to the biblical book of Judith, but it is just as important to see these connections upheld when the whole Latin corpus is examined. Furthermore, the same associations occur even in the Old English corpus: ealle (all) is directly associated with Iudith, god and gode (both forms of god), and lande (land), through which it connects further to Iudea, folc (people), and (via folc) Israhela. One more set of text-mining results is relevant to these findings. When I analyzed the Latin corpus with the List Word Pairs tool at TAPoR,[16] the two most frequent word pairs were filii Israhel (18 instances) and omnis populus (15 instances).
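A word-pair count of this kind, together with the summary figures given in note 16, can be sketched as a bigram count. This is an assumption about what List Word Pairs does, not TAPoR's documented algorithm: here a pair is two adjacent tokens, case is folded, and removing stopwords first makes formerly non-adjacent words count as pairs. Because the tokenizer accepts any Unicode letter, the sketch also handles æ, þ, ð, and ƿ, the characters that kept the TAPoR tool from working with the Old English corpus.

```python
import re
from collections import Counter

# Assumed tokenizer: runs of Unicode letters (includes Old English letters).
WORD_RE = re.compile(r"[^\W\d_]+")

def word_pairs(text, stopwords=frozenset()):
    """Count adjacent word pairs (bigrams), optionally dropping stopwords first."""
    tokens = [w.lower() for w in WORD_RE.findall(text)]
    tokens = [w for w in tokens if w not in stopwords]
    return Counter(zip(tokens, tokens[1:]))

def pair_summary(pairs):
    """Summarize a bigram Counter: unique pairs, total pairs, pairs seen once or twice."""
    freq_of_freq = Counter(pairs.values())
    return {
        "unique": len(pairs),
        "total": sum(pairs.values()),
        "once": freq_of_freq[1],
        "twice": freq_of_freq[2],
    }
```

Calling `word_pairs(text).most_common(2)` lists the two most frequent pairs, and `pair_summary` yields the same kinds of totals reported in note 16, though not necessarily the same values.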

Two implications of these analyses may be followed. First, the results of macroanalysis, ranging from data about word frequencies and word pairs to visualizations of collocate clusters, are consistent. Yet, second, they also prompt interpretation that benefits most from the contexts provided by a long tradition of humanistic study of the subject at hand. To modern scholars, the issue of collective identity is obviously key for the Israelite peoples, and no less so in the climate of anxiety during the late Second Temple period (c. 200 BCE to 70 CE), when the Greeks, Egyptians, Romans, and various other Middle Eastern empires vied for control of their homeland. This is, in fact, thematically at the root of the biblical story of Judith, hence the connections I previously pointed out. Yet the clustering of Latin and Old English texts connected to the Vulgate Judith also shows that medieval people continued to share this concern. In other words, medieval people, including the Anglo-Saxon authors who composed the Old English texts, were no less able to identify a key issue than modern scholars have been. This also raises questions about how and why Anglo-Saxons would have capitalized on Old Testament themes of collective identity, as Bede did in his Historia ecclesiastica when linking the Anglo-Saxons to the Israelites as continuations of the chosen people of the Old Testament covenant. While this is not the place to explore these issues, they do provide provocative avenues for further study that may emerge fruitfully from the critical engagement between digital tools and a close reading of their results through a humanistic lens.

[1] Data and visualizations referred to in this article may be viewed and downloaded at the open access GitHub repository for this project, uploaded on October 24, 2013, at https://github.com/brandonwhawk/studyingjudith.

[2] See, most recently, Franco Moretti, Distant Reading (London: Verso, 2013); and Matthew L. Jockers, Macroanalysis: Digital Methods and Literary History (Urbana, IL: U of Illinois P, 2013).

[3] See esp. essays included in the special issue of Poetess Archive Journal 2.1 (2010), http://journals.tdl.org/paj/index.php/paj/issue/view/8; Johanna Drucker, “Humanities Approaches to Graphical Display,” Digital Humanities Quarterly 5.1 (2011), http://www.digitalhumanities.org/dhq/vol/5/1/000091/000091.html; and “Humanistic Theory and Digital Scholarship,” Debates in the Digital Humanities, ed. Matthew K. Gold (Minneapolis, MN: U of Minnesota P, 2012), 85-95, and online at http://dhdebates.gc.cuny.edu/debates/text/34; as well as Laura Mandell, “How to Read a Literary Visualization: Network Effects in the Lake School of Romantic Poetry,” Digital Studies 3.2 (2012), http://www.digitalstudies.org/ojs/index.php/digital_studies/article/view/236; and Tanya Clement, “Distant Listening or Playing Visualizations Pleasantly with the Eyes and Ears,” Digital Studies 3.2 (2012), http://www.digitalstudies.org/ojs/index.php/digital_studies/article/view/228.

[4] “Humanistic Theory,” 91.

[5] “Graphesis,” Poetess Archive Journal 2.1 (2010), at http://journals.tdl.org/paj/index.php/paj/article/view/4.

[6] “Defining Data for Humanists: Text, Artifact, Information or Evidence?” Journal of Digital Humanities 1.1 (2011), http://journalofdigitalhumanities.org/1-1/defining-data-for-humanists-by-trevor-owens/.

[7] Macroanalysis, 168.

[8] Distant Reading, 179-210.

[9] “Humanistic Theory and Digital Scholarship.”

[10] Stéfan Sinclair and Geoffrey Rockwell, “Collocate Clusters,” Voyant, 2013, http://voyant-tools.org/tool/Links/.

[11] The Links tool with the Judith Latin corpus data already uploaded may be accessed here: http://voyant-tools.org/tool/Links/?corpus=1382452387493.8144&stopList=1382452853727ou.

[12] The Links tool with the Judith Latin corpus data (as a single file) already uploaded may be accessed here: http://voyant-tools.org/tool/Links/?corpus=1382487262714.8638&stopList=1382487412222tg.

[13] The Links tool with the Judith Old English corpus data already uploaded may be accessed here: http://voyant-tools.org/tool/Links/?corpus=1382463604321.8371&stopList=1382464120853uc. The Links tool with the Judith Old English corpus data (as a single file) already uploaded may be accessed here: http://voyant-tools.org/tool/Links/?corpus=1382488470390.1204&stopList=1382488599947fj.

[14] Cf. Drucker, “Humanities Approaches to Graphical Display.”

[15] Here I take my cues specifically from Drucker, “Humanistic Theory and Digital Scholarship.”

[16] Taporware Project, McMaster University, 2013, http://taporware.ualberta.ca/~taporware/betaTools/listwordpair.shtml. For the Latin corpus, there are 22,097 unique word pairs, with 29,432 word pairs in total; 17,889 word pairs occurred once and 2,990 word pairs occurred twice. With stops removed, there are 14,845 unique word pairs, and there are 17,407 word pairs in total; 12,843 word pairs occurred once and 1,670 word pairs occurred twice. Unfortunately, lack of support for Unicode (UTF-8) precludes using this tool effectively with Old English, due to special typographical characters (Æ/æ, Þ/þ, Ð/ð, Ƿ/ƿ). This demonstrates that not all tools play nicely with medieval languages and literature.
